Best Web Browser Best Web Scraping Tool

Web scraping, or web data extraction, is complicated. Obtaining the correct page source, parsing it correctly, rendering JavaScript, and extracting the data in a structured, usable form are all difficult tasks. Different tools suit different users according to their needs. Here is a list of the 25 best web scraping tools, from open source projects to hosted SaaS solutions to desktop software.

Web scraping tools are used to extract data from the internet. This is our list of the best web scraping tools for 2020. Octoparse, for example, scrapes web data and turns unstructured or semi-structured data from websites into a structured data set. It also provides ready-to-use web scraping templates for sites including Amazon, eBay, Twitter, BestBuy, and many others. Octoparse also provides a web data service that helps customize scrapers based on your scraping needs.

Yellow Pages Spider

Yellow Pages Spider is a powerful scraping tool that can search in popular yellow pages directories and scrape data like business name, complete address, web link, phone numbers, and email addresses.

Yellow Pages Spider can create a fully customized search in the browser to target a website. After scraping, the structured data is saved in a CSV file that can be imported into MS Excel or a MySQL database.
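As a rough illustration of the CSV export step, here is a minimal Python sketch using only the standard library. It is not how Yellow Pages Spider itself is implemented; the field names, the `records_to_csv` helper, and the sample record are hypothetical and simply mirror the data types listed above.

```python
import csv
import io

# Hypothetical column names mirroring the fields described above.
FIELDS = ["business_name", "address", "website", "phone", "email"]


def records_to_csv(records):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Made-up sample record; a real scraper would fill this from a directory page.
sample = [
    {
        "business_name": "Acme Plumbing",
        "address": "12 Main St, Springfield",
        "website": "https://acme.example",
        "phone": "555-0100",
        "email": "info@acme.example",
    }
]

csv_text = records_to_csv(sample)
print(csv_text)
```

The address contains a comma, so the `csv` module quotes that field automatically, which keeps the file safe to import into a spreadsheet or database.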

Web Content Extractor

Web Content Extractor (WCE) is a user-oriented application that scrapes and parses data from web pages. It is easy to use and lets you save every project for daily use. WCE exports data to many formats: Excel, text, HTML, MS Access DB, SQL script file, MySQL script file, XML file, HTTP submit form, and ODBC data source.

Web Content Extractor (WCE) is a very user-friendly application and is steadily growing in practical functionality for complex scraping cases.

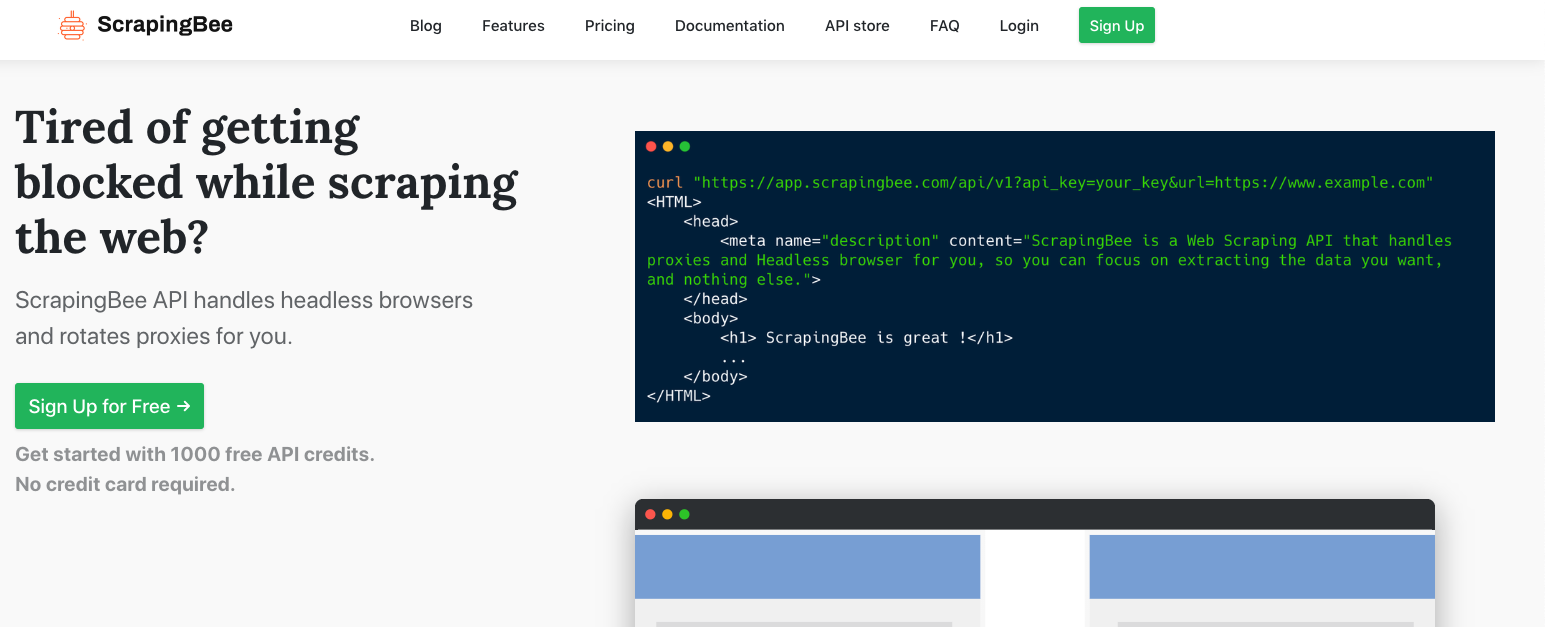

Scraper API

Scraper API is a tool for building web scrapers. It handles proxies, browsers, and CAPTCHAs so that you can get the raw HTML from any website with a simple API call. It manages its own internal pool of thousands of proxies from different proxy providers and automatically throttles requests to avoid IP bans and CAPTCHAs. The service also offers special proxy pools for e-commerce price scraping, search engine scraping, social media scraping, sneaker scraping, ticket scraping, and more.
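The request throttling mentioned above can be illustrated with a minimal Python sketch. This is not Scraper API's implementation, which the source does not describe; the `Throttle` class, its parameters, and the example URLs are hypothetical. The sketch only enforces a minimum delay between consecutive requests.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock  # injectable so the behavior can be checked without real sleeping
        self._sleep = sleep
        self._last = None  # time of the previous request, None before the first one

    def wait(self):
        """Sleep just long enough to keep calls min_interval apart; return the delay used."""
        delay = 0.0
        if self._last is not None:
            elapsed = self._clock() - self._last
            delay = max(0.0, self.min_interval - elapsed)
            if delay > 0:
                self._sleep(delay)
        self._last = self._clock()
        return delay


throttle = Throttle(min_interval=0.05)
for url in ["https://example.com/a", "https://example.com/b"]:
    waited = throttle.wait()
    # A real scraper would send the HTTP request here.
    print(f"waited {waited:.2f}s before requesting {url}")
```

A production service would likely track limits per target site and per proxy; a single global interval is the simplest version of the idea.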

ScrapeSimple

ScrapeSimple is a service for people who want a custom scraper built for them. Web scraping is made as easy as filling out a form with instructions for what kind of data you want to scrape.

ScrapeSimple is a fully managed service that builds custom web scrapers. It is a good fit for businesses that just want an HTML scraper without writing code. Tell them what information or data you need, and they will design a custom web scraper to deliver it.

Import.io

Import.io imports the data from a specific web page and exports it to CSV format. Import.io can scrape hundreds of web pages in a few minutes without writing a single line of code and build 1000+ APIs based on the customer's requirements.

Import.io uses cutting-edge techniques to scrape millions of data points.

Webhose.io

Webhose.io provides direct access to real-time structured data from crawling thousands of online web sources. Webhose.io supports extracting web data in more than 240 languages and saving it in different formats such as XML, JSON, and RSS.

Webhose.io is a browser-based web app that uses its own data crawling technology to crawl huge amounts of data from multiple channels through a single API.

Dexi.io

Dexi.io (formerly CloudScrape) collects data from websites and, unlike some other tools, does not require any download. It has a browser-based editor to set up crawlers and extract data in real time. The extracted data can be saved on cloud platforms like Google Drive and Box.net or exported as CSV or JSON.

Dexi.io supports anonymous data access by offering a set of proxy servers that hide your identity. Dexi.io stores data on its servers for 2 weeks before archiving it.

Scrapinghub

Scrapinghub is a cloud-based data scraping tool that helps developers fetch structured data. Scrapinghub uses Crawlera, a smart proxy rotator that supports bypassing bot countermeasures to crawl bot-protected sites.

Scrapinghub converts the entire web page into structured organized content/data.

Scrapinghub runs on a cloud platform called Scrapy Cloud, which is designed for web crawling operations. The Scrapinghub crawlers allow users to monitor, control, and route web crawlers across thousands of web pages with only a few clicks. Scrapinghub comes with a full suite of QA tools for observing and logging web crawler activities and data.

Scrapinghub assists over 2,000 companies and millions of developers from across the globe who value accurate and reliable structured web data.

Scrapinghub combines open source libraries such as Scrapy, a PaaS for running web crawls, large internal software libraries (including spiders for many websites, custom extractors, data post-processing, and proxy management), and a scraping service that can automatically extract data based on examples.

ParseHub

ParseHub is built to crawl single or multiple websites. ParseHub also supports JavaScript, AJAX, sessions, cookies, and redirects. ParseHub uses machine learning technology to recognize complicated documents on the web and generates output files in the required data format.

ParseHub extracts data from websites into a local database based on customer requirements. Users do not need programming knowledge to get started: ParseHub has a user-friendly interface and high-quality user support.

ParseHub's features include email address extraction, disparate data collection, IP address extraction, image extraction, phone number extraction, pricing extraction, and web data extraction.
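Email address and phone number extraction, as listed above, usually comes down to pattern matching over page text. The following is a minimal Python sketch of that idea, not ParseHub's own method. The regular expressions are deliberately simple assumptions (the phone pattern only covers ten-digit North American style numbers), and the sample text is made up.

```python
import re

# Simplified patterns for illustration; real-world formats vary far more widely.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}")


def extract_contacts(text):
    """Return the unique email addresses and phone numbers found in text, sorted."""
    return {
        "emails": sorted(set(EMAIL_RE.findall(text))),
        "phones": sorted(set(PHONE_RE.findall(text))),
    }


page_text = (
    "Contact sales@example.com or support@example.com. "
    "Call (555) 010-0199 or 555-010-0123."
)
contacts = extract_contacts(page_text)
print(contacts)
```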

ParseHub is also available as a desktop application for Windows, Mac OS X, and Linux.

Scraping-Bot.io

Scraping-Bot.io is an effective tool for scraping data from a website or URL. It works on product pages, where it collects the data a client needs, such as images, product title, product price, product description, stock, delivery costs, EAN, and product category.

Scraping-Bot.io is also used to check a website's ranking on Google.

URL Profiler

URL Profiler is a powerful SEO and data scraping tool for extracting and analyzing bulk data from websites at the domain and URL level. URL Profiler supports a wide range of SEO-related tasks such as content audits, backlink analysis, competitive research, and penalty audits.

URL Profiler is effective in retrieving a big array of links and social media data without limits or restrictions.

Octoparse

Octoparse is a visual web data extraction and scraping tool. Users will find it easy to scrape bulk data from websites with Octoparse, and scraping tasks require no coding of any kind. It makes getting structured data from the web easier and faster for people who do not know how to code. Octoparse automatically extracts content from websites and allows users to save it in the format of their choice.

Octoparse compares well with other web scraping tools and data grabbers. It offers a free plan that can scrape data from any website.

OutWit Hub

OutWit Hub is a Firefox extension. Once the extension is installed and activated, data can be scraped from websites instantly. OutWit Hub has a "Fast Scrape" feature that scrapes data quickly from a list of URLs. Scraping data with OutWit Hub requires no programming skills. OutWit Hub is an excellent alternative for quickly scraping small amounts of data from websites.

Diffbot

Diffbot is an efficient and accurate web data extractor for mining structured data from any website. Diffbot uses Artificial Intelligence (AI) technology and APIs to analyze pages and scrape structured data. The extraction process in Diffbot is completely automatic. Diffbot's web scraping solution has an API that identifies and evaluates web pages.

After the Diffbot API identifies the page type, it routes the targeted page to the matching API for data scraping.

Visual Web Ripper

Visual Web Ripper is a multi-featured visual data scraping tool. Visual Web Ripper automatically analyzes, collects, and scrapes content. It is a user-friendly tool for extracting data from highly dynamic websites, such as AJAX websites, and for collecting content structures such as product catalogs or search results.

DataStreamer

DataStreamer provides APIs for social media, weblogs, news, video, and live web content so that users can scrape data in large volumes. DataStreamer helps fetch social media content from across the web and extract critical metadata using natural language processing. DataStreamer offers fully integrated full-text search powered by Kibana and Elasticsearch, with a comprehensive admin console.

FMiner

FMiner is a tool for web scraping, data extraction, screen scraping, web harvesting, web crawling, and web macro support for Windows and Mac OS X.

FMiner is an easy-to-use web data scraping tool that combines its feature set with an intuitive visual project design tool. FMiner uses modern data mining techniques to scrape data from websites ranging from online product catalogs and real estate classified sites to popular search engines and yellow page directories.

Apify SDK

Apify SDK is a scalable web crawling, data extraction, and scraping library for JavaScript/Node.js. It enables the development of data extraction and web automation jobs with headless Chrome and Puppeteer.

Apify SDK automates workflows, allows easy and fast crawling across the web, and works both locally and in the cloud. The Apify SDK npm package provides several helper functions to run code on the Apify Cloud, taking advantage of its pool of proxies, job scheduler, and data storage.

Sequentum Content Grabber

The Sequentum Content Grabber is a powerful tool for web data extraction that is designed to scale with an organization. It offers features such as a visual point-and-click editor.

The Sequentum Content Grabber is reliable and scalable. It uses multi-threading to increase performance and provides advanced debugging, logging, and error handling.

The Sequentum Content Grabber extracts data faster than other tools and helps build web apps with a dedicated web API that allows extracting data directly from any website.

Mozenda

Mozenda allows a user to scrape and extract text, images, and PDF content from websites. Mozenda helps organize and prepare data files for publishing, and it collects and publishes web data to the user's preferred BI tool or database. Mozenda provides a point-and-click interface for creating web scraping agents in minutes. Mozenda has a job sequencer and request blocking features for scraping web data in real time.

Mozenda converts all extracted data into valuable business intelligence.

Scrapy

Scrapy is a Python framework for building web scrapers. Scrapy provides the user with the tools needed to scrape web data and store it in a preferred structure and format. Its main advantage is that it is built on top of Twisted, an asynchronous networking framework.

Scrapy is used to extract data on a large scale. Typical uses are data extraction, data mining, monitoring, and automated testing.
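To show why an asynchronous networking foundation matters for large crawls, here is a minimal Python sketch of concurrent fetching. Note the substitution: it uses the standard library's `asyncio` rather than Twisted or Scrapy itself, and `fake_fetch` is a hypothetical stand-in that simulates network latency instead of making real requests.

```python
import asyncio
import time


async def fake_fetch(url, delay=0.1):
    """Stand-in for a network request: waits without blocking the event loop."""
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def crawl(urls):
    """Start all fetches at once and collect the results in input order."""
    return await asyncio.gather(*(fake_fetch(u) for u in urls))


urls = [f"https://example.com/page/{i}" for i in range(5)]

start = time.monotonic()
pages = asyncio.run(crawl(urls))
elapsed = time.monotonic() - start

print(len(pages), "pages fetched in", round(elapsed, 2), "seconds")
```

Because the five simulated requests wait concurrently, the total time stays close to one request's delay rather than the sum of all five. That is the property an asynchronous framework gives a crawler.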

WebHarvy

WebHarvy’s visual web scraper has an inbuilt browser that scrapes/extracts data from web pages.

WebHarvy has a point-and-click interface for selecting the elements to grab, so users don't have to write any code for scraping. WebHarvy includes a multi-level category scraping feature that can follow each level of category links to scrape data from pages. Users can set up proxy servers to hide their IP address while scraping data from a website.

WebHarvy can save data into CSV, JSON and XML files.

Cheerio.js

Cheerio is a library that parses HTML and XML documents and lets you use jQuery syntax to work with the downloaded data.

When writing a web scraper in JavaScript, the Cheerio API makes parsing, manipulating, and rendering markup efficient. Cheerio is not a web browser, so it does not produce a visual rendering, apply CSS, load external resources, or execute JavaScript.

Cheerio can parse any type of HTML and XML document.
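The distinction between parsing markup and running a browser can be shown with a short sketch. This example is in Python and uses the standard library's `html.parser`, so it is an analogy to Cheerio rather than Cheerio's own API; the `LinkExtractor` class and the sample document are hypothetical.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, text) pairs for every <a href=...> element in a document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> currently being read, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an open <a> element.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


document = """
<ul>
  <li><a href="/a">First</a></li>
  <li><a href="/b">Second <b>item</b></a></li>
</ul>
<script>document.write('<a href="/c">Injected</a>')</script>
"""

parser = LinkExtractor()
parser.feed(document)
print(parser.links)
```

The link that the script would inject in a real browser never appears in the result, because the parser treats the script body as plain text and does not execute it. Pages that build their content with JavaScript need a headless browser instead.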

80legs

80legs.com is a web data scraping and web crawling service. 80legs is powered by a grid computing architecture, which allows it to crawl and scrape web data quickly. 80legs provides users with a web form, an API, or an application framework to design their own crawling and scraping logic. 80legs handles link parsing, de-duplication, redirects, throttling, and other crawling challenges.
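Link parsing and de-duplication, two of the crawling challenges named above, can be sketched in a few lines of Python. This is an illustrative approach under simple assumptions, not a description of how 80legs works internally. The `normalize` and `unique_links` helpers and the sample URLs are hypothetical, and the normalization rules (drop fragments, lowercase scheme and host, treat an empty path as `/`) are one reasonable choice among many.

```python
from urllib.parse import urldefrag, urljoin, urlsplit, urlunsplit


def normalize(base_url, href):
    """Resolve href against base_url, drop the fragment, lowercase scheme and host."""
    absolute, _fragment = urldefrag(urljoin(base_url, href))
    parts = urlsplit(absolute)
    path = parts.path or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def unique_links(base_url, hrefs):
    """Return normalized URLs in first-seen order, skipping duplicates."""
    seen = set()
    ordered = []
    for href in hrefs:
        url = normalize(base_url, href)
        if url not in seen:
            seen.add(url)
            ordered.append(url)
    return ordered


links = unique_links(
    "https://Example.com/catalog/",
    ["item?id=1", "item?id=1#reviews", "/catalog/item?id=1", "../about", "https://example.com"],
)
print(links)
```

The first three links point at the same page once they are resolved and stripped of fragments, so a crawler using this check would fetch that page only once.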

Helium Scraper

Helium Scraper is an easy-to-use yet powerful web scraper that can extract data from any website. Its interface lets users scrape structured and patterned data with a few clicks, and more complex data with the help of JavaScript and SQL. Helium Scraper's features include pricing extraction, phone number extraction, image extraction, and web data extraction.

Helium Scraper is a handy tool for users who want to mine web page information and structured data efficiently.

What is Web Scraping?